ACM SIGGRAPH / Eurographics Symposium on Computer Animation (SCA) 2024

McGill University, Montreal, Canada

Learning to Play Guitar with Robotic Hands

Abstract

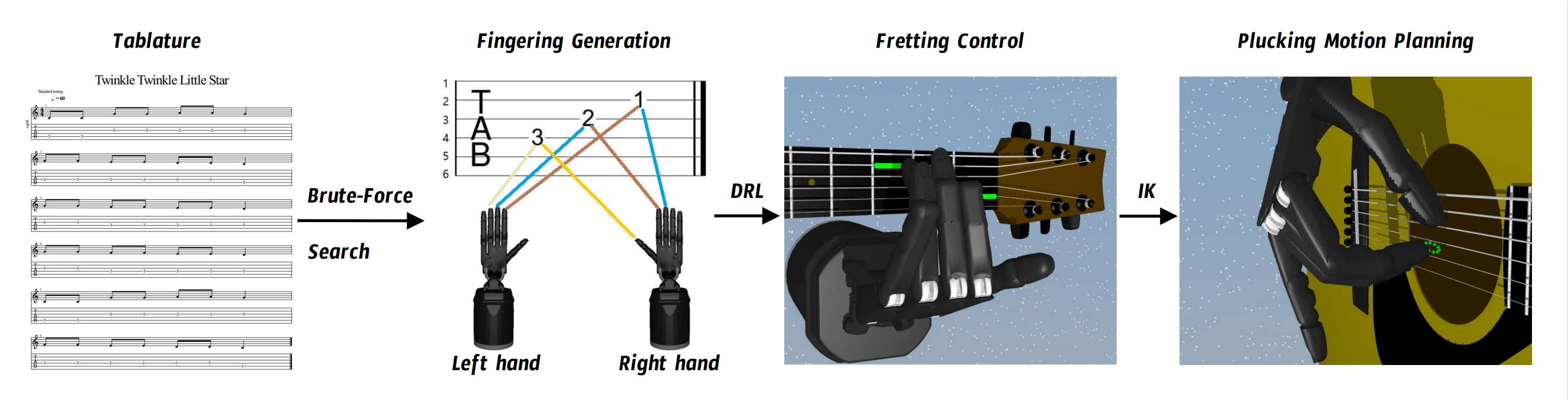

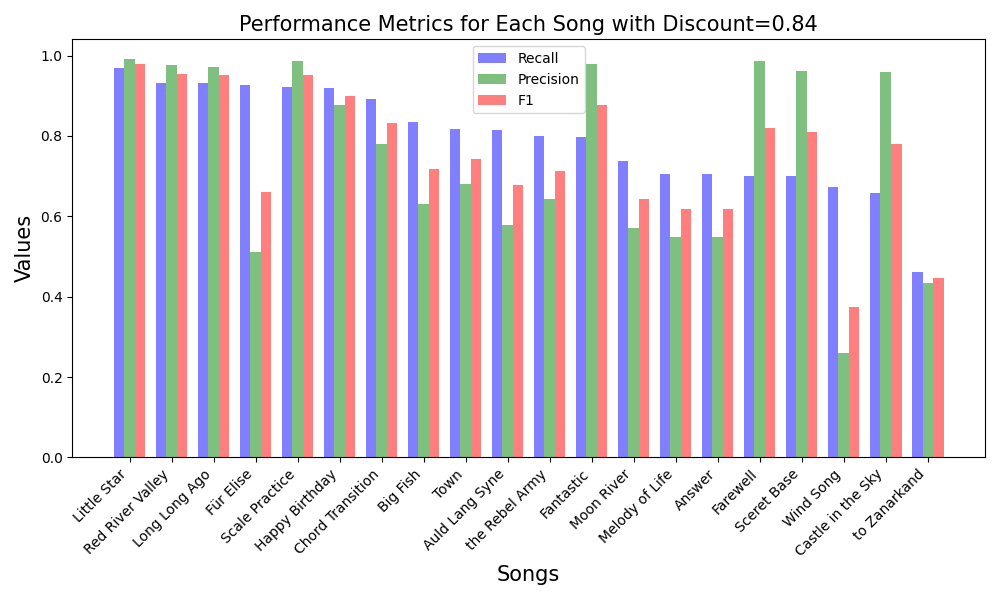

Playing the guitar is a dexterous human skill that poses significant challenges for computer graphics and robotics because of the precision required in finger positioning and the coordination required between the two hands. Current methods often rely on motion capture data to replicate specific guitar-playing segments, which restricts the range of performances and demands intricate post-processing. In this paper, we introduce a novel reinforcement learning model that plays the guitar with robotic hands from input tablatures, without requiring motion capture datasets. To achieve this, we divide the guitar-playing simulation task into three stages: (a) for an input tablature, we first generate corresponding fingerings that align with human playing habits; (b) using the generated fingerings as guidance, we train a neural network with deep reinforcement learning to control the fingers of the left hand; and (c) we generate plucking movements for the right hand with inverse kinematics, according to the tablature. We evaluate our method quantitatively with precision, recall, and F1 scores computed over the played musical notes. In addition, we conduct a qualitative analysis through user studies to evaluate the visual and auditory quality of the guitar performances. The results demonstrate that our model excels at playing most easy and moderately difficult pieces, playing nearly all of their notes accurately.
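Stage (c) relies on inverse kinematics for the plucking hand. The hand model and IK solver are not specified here, so the following is only a minimal sketch under stated assumptions: each plucking finger is treated as a hypothetical planar two-link chain with link lengths `l1` and `l2`, and a pluck is a straight-line fingertip path from `start` to `end` through the string, solved with closed-form two-link IK at every frame. The function names are illustrative, not the authors' code.

```python
import math


def two_link_ik(x, y, l1, l2, elbow_up=False):
    """Closed-form IK for a planar two-link chain rooted at the origin.

    Returns joint angles (theta1, theta2) in radians that place the chain tip
    at (x, y). Raises ValueError if the target is out of reach.
    """
    # Law of cosines gives the cosine of the distal joint angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0 + 1e-9:
        raise ValueError("target out of reach")
    c2 = max(-1.0, min(1.0, c2))  # absorb rounding error at full extension
    s2 = math.sqrt(1.0 - c2 * c2)
    if elbow_up:
        s2 = -s2  # pick the other of the two mirror-image solutions
    theta2 = math.atan2(s2, c2)
    # Proximal joint: direction to the target minus the angle added by link 2.
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2


def forward(theta1, theta2, l1, l2):
    """Forward kinematics, used here to check an IK solution."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def pluck_trajectory(start, end, l1, l2, n_frames=10):
    """Hypothetical pluck: move the fingertip in a straight line from start
    to end (through the string) and solve IK at every frame.
    Requires n_frames >= 2."""
    frames = []
    for i in range(n_frames):
        t = i / (n_frames - 1)
        x = start[0] + t * (end[0] - start[0])
        y = start[1] + t * (end[1] - start[1])
        frames.append(two_link_ik(x, y, l1, l2))
    return frames
```

Solving IK per frame on an interpolated fingertip path keeps the sketch simple; a full hand model would need a multi-joint solver and joint limits.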

Overview

For a given input tablature, our system uses a brute-force algorithm to generate the fingering for playing the guitar. It then uses Deep Reinforcement Learning (DRL) to train a policy that controls the left hand as it presses the strings, and Inverse Kinematics (IK) to control the right hand as it plucks the strings.
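The brute-force fingering step can be illustrated with a small sketch. Everything below is an assumption rather than the authors' algorithm: a tablature is a list of steps, each a list of `(string, fret)` pairs with fret 0 meaning an open string; fingers are numbered 1 (index) to 4 (little); the cost function (hand stretch within a step plus hand shift between steps) is hypothetical; and barre chords are ignored. The search enumerates every combination of per-step finger assignments and keeps the cheapest, which is exponential in the number of steps and therefore only practical for short passages.

```python
import itertools

FINGERS = (1, 2, 3, 4)  # index, middle, ring, little


def candidate_fingerings(chord):
    """All finger tuples for the fretted notes of one tab step.

    chord: list of (string, fret); fret 0 is an open string and needs no finger.
    The i-th finger in each tuple presses the i-th fretted note.
    """
    frets = [fret for _, fret in chord if fret > 0]
    result = []
    for perm in itertools.permutations(FINGERS, len(frets)):
        # A lower fret must be pressed by a lower-numbered finger.
        ordered = all(
            perm[i] < perm[j]
            for i in range(len(frets))
            for j in range(len(frets))
            if frets[i] < frets[j]
        )
        # The implied index-finger fret (fret - finger + 1) must be >= 1.
        on_neck = all(f >= k for f, k in zip(frets, perm))
        if ordered and on_neck:
            result.append(perm)
    return result


def sequence_cost(tab, assignment):
    """Hypothetical cost: hand stretch within each step plus hand shift
    between consecutive steps that use fretted notes."""
    cost, prev = 0.0, None
    for chord, fingers in zip(tab, assignment):
        frets = [fret for _, fret in chord if fret > 0]
        pos = [f - k + 1 for f, k in zip(frets, fingers)]
        if not pos:
            continue  # open strings only: the hand can stay where it is
        cost += max(pos) - min(pos)
        centre = sum(pos) / len(pos)
        if prev is not None:
            cost += abs(centre - prev)
        prev = centre
    return cost


def brute_force_fingering(tab):
    """Enumerate every combination of per-step fingerings; keep the cheapest."""
    per_step = [candidate_fingerings(chord) for chord in tab]
    if any(not options for options in per_step):
        raise ValueError("a step cannot be fingered under these constraints")
    return min(itertools.product(*per_step), key=lambda a: sequence_cost(tab, a))
```

For the open C major chord `[(5, 3), (4, 2), (3, 0), (2, 1), (1, 0)]`, these constraints leave a single assignment, `(3, 2, 1)`: ring, middle, and index fingers on frets 3, 2, and 1, which matches the common human fingering.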

Results

We collected multiple pieces from online sources as our training dataset. In testing, most pieces achieve a recall above 0.7, meaning that more than 70% of the notes specified in the tablature are reproduced, so the majority of each played piece can be accurately identified by listeners.
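The precision, recall, and F1 figures can be made concrete with a note-level scoring sketch. The exact matching rule used in the paper is not stated here; this version assumes each note is an `(onset_seconds, midi_pitch)` pair, counts a played note as correct when it has the same pitch as a still-unmatched reference note and an onset within a hypothetical tolerance `tol` (50 ms by default), and uses greedy nearest-onset matching instead of an optimal one-to-one assignment.

```python
def note_scores(reference, played, tol=0.05):
    """Precision, recall and F1 for one piece.

    reference, played: lists of (onset_seconds, midi_pitch).
    A played note is a true positive if it matches an unused reference note
    with the same pitch and |onset difference| <= tol (greedy matching).
    """
    unused = list(reference)
    tp = 0
    for onset, pitch in sorted(played):
        best = None
        for idx, (r_on, r_pitch) in enumerate(unused):
            if r_pitch == pitch and abs(r_on - onset) <= tol:
                # Keep the reference note with the closest onset.
                if best is None or abs(r_on - onset) < abs(unused[best][0] - onset):
                    best = idx
        if best is not None:
            unused.pop(best)  # each reference note can be matched only once
            tp += 1
    precision = tp / len(played) if played else 0.0
    recall = tp / len(reference) if reference else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

With four reference notes and three played notes of which two match, `note_scores` returns precision 2/3, recall 0.5, and F1 4/7; recall here is the share of tablature notes that were played correctly.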